Terraform tutorial

Terraform is an open source infrastructure as code software tool. It lets you build, change, and version infrastructure effectively using a high-level configuration language called HashiCorp Configuration Language (HCL), or optionally JSON.

Terraform GCP tutorial

Terraform supports a number of cloud infrastructure providers, such as Amazon Web Services (AWS), Google Cloud Platform (GCP), IBM Cloud, Microsoft Azure, and Oracle Cloud Infrastructure.

In this tutorial, you will see how to use Terraform on Google Cloud Platform to create a VM in GCP and start a basic Python Flask server on it.

Pre-requisites

If you don't already have one, create a free trial GCP account (it comes with $300 of credit). You also need the following set up locally:

- Existing SSH key

- Terraform

Project and Credentials

Create a GCP project, then set up a service account key. Terraform uses this key in its configuration file to create and manage the resources of your GCP project.

Create the service account key and click Create to download it as a JSON file (key.json). This file holds the credentials Terraform needs to manage resources on GCP, so keep it in a secure location. For the purposes of this tutorial, the file was placed in a newly created “myterraformproj” project directory, as shown below:

```
sneppets@cloudshell:~$ mkdir myterraformproj
sneppets@cloudshell:~$ cd myterraformproj/
sneppets@cloudshell:~/myterraformproj$ ls
key.json
```
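Before you point Terraform at the key, it can help to confirm that the file really is a service account key. The following is a minimal Python sketch and is not part of the original tutorial. The helper name `check_service_account_key` is hypothetical. The fields it checks (`type`, `project_id`, `private_key`, `client_email`) are an assumption based on the usual layout of a downloaded key file.

```python
import json
from pathlib import Path

# A subset of the fields a downloaded service account key normally contains
# (assumption for this sketch; the real file has more fields).
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(path):
    """Return the project_id stored in a service account key file.

    Raises ValueError if the file is missing, lacks expected fields,
    or is not a service account key.
    """
    key_path = Path(path)
    if not key_path.is_file():
        raise ValueError(f"credentials file not found: {key_path}")
    data = json.loads(key_path.read_text())
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise ValueError(f"missing fields in {key_path}: {', '.join(missing)}")
    if data["type"] != "service_account":
        raise ValueError(f"unexpected key type: {data['type']!r}")
    return data["project_id"]
```

Run it from the myterraformproj directory, for example `check_service_account_key("key.json")`. It should return the same project ID that goes into main.tf (sneppets-gcp in this tutorial).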

Setup Terraform

In the “myterraformproj” directory, create a Terraform configuration file named main.tf with the contents shown below:

```
sneppets@cloudshell:~/myterraformproj$ sudo vi main.tf
```

```hcl
// Configure the Google Cloud provider
provider "google" {
  credentials = file("key.json") // service account key JSON file
  project     = "sneppets-gcp"   // project ID
  region      = "us-west1"
}
```

```
sneppets@cloudshell:~/myterraformproj$ ls
key.json  main.tf
```

The credentials argument points to the key.json file downloaded in the previous step, and the project argument is set to your project ID. The provider "google" block tells Terraform to use the Google Cloud provider. Now run the following command to initialize the working directory and download the latest version of the provider plugin:

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform init

Initializing the backend...

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "google" (hashicorp/google) 3.4.0...

The following providers do not have any version constraints in configuration, so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking changes, it is recommended to add version = "..." constraints to the corresponding provider blocks in configuration, with the constraint strings suggested below.

* provider.google: version = "~> 3.4"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.

If you ever set or change modules or backend configuration for Terraform, rerun this command to reinitialize your working directory. If you forget, other commands will detect it and remind you to do so if necessary.
```

Configure Compute Engine resource

To create a single Compute Engine instance running Debian, update main.tf as shown below. This configuration uses f1-micro, the smallest machine type:

```hcl
// Configure the Google Cloud provider
provider "google" {
  credentials = file("key.json")
  project     = "sneppets-gcp"
  region      = "us-west1"
}

// Terraform plugin for creating new IDs
resource "random_id" "instance_id" {
  byte_length = 8
}

// A single Google Compute Engine instance
resource "google_compute_instance" "default" {
  name         = "flask-vm-${random_id.instance_id.hex}"
  machine_type = "f1-micro"
  zone         = "us-west1-a"

  boot_disk {
    initialize_params {
      image = "debian-cloud/debian-9"
    }
  }

  // Make sure Flask is installed on all new instances for later steps
  metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"

  network_interface {
    network = "default"

    // Include this section to give the VM an external IP address
    access_config {}
  }
}
```

In the updated main.tf, the random_id resource generates the random suffix used in the instance name. It comes from a separate provider, hashicorp/random, which is not installed yet. Run terraform init again to download and install it:

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform init

Initializing the backend...

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "random" (hashicorp/random) 2.2.1...

The following providers do not have any version constraints in configuration, so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking changes, it is recommended to add version = "..." constraints to the corresponding provider blocks in configuration, with the constraint strings suggested below.

* provider.google: version = "~> 3.4"
* provider.random: version = "~> 2.2"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see any changes that are required for your infrastructure. All Terraform commands should now work.

If you ever set or change modules or backend configuration for Terraform, rerun this command to reinitialize your working directory. If you forget, other commands will detect it and remind you to do so if necessary.
```
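The init output recommends pinning `version = "~> 3.4"` for google and `version = "~> 2.2"` for random. The `~>` operator lets only the rightmost stated version component increase. So "~> 3.4" accepts 3.4 or any later 3.x release, but not 4.0.

The Python sketch below illustrates that rule. It is a simplified model for explanation only, not Terraform's own implementation: it handles only `~>` with at least MAJOR.MINOR and ignores pre-release versions. The function names are hypothetical.

```python
def parse_version(text):
    """Turn a dotted version such as '3.4' or '3.4.0' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().split("."))


def satisfies_pessimistic(version, constraint):
    """Check a version against a '~>' constraint, e.g. '~> 3.4'.

    '~> 3.4'   allows >= 3.4.0 and < 4.0.0
    '~> 3.4.0' allows >= 3.4.0 and < 3.5.0
    """
    parts = constraint.split()
    if len(parts) != 2 or parts[0] != "~>":
        raise ValueError(f"only '~> X.Y[.Z]' constraints are handled: {constraint!r}")
    bound = parse_version(parts[1])
    if len(bound) < 2:
        raise ValueError("this sketch expects at least MAJOR.MINOR in the constraint")

    candidate = parse_version(version)
    width = max(len(bound), len(candidate))
    # Pad with zeros so (3, 4) and (3, 4, 0) compare as equal.
    padded_candidate = candidate + (0,) * (width - len(candidate))
    padded_bound = bound + (0,) * (width - len(bound))

    # Lower bound: at least the stated version.
    if padded_candidate < padded_bound:
        return False
    # Upper bound: every stated component except the rightmost one is pinned.
    prefix_len = len(bound) - 1
    return padded_candidate[:prefix_len] == bound[:prefix_len]
```

For example, the providers installed above satisfy the suggested constraints: `satisfies_pessimistic("3.4.0", "~> 3.4")` and `satisfies_pessimistic("2.2.1", "~> 2.2")` are both true. A future 4.0.0 google provider would be rejected.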

To validate the configuration in main.tf so far, run terraform plan. It checks the configuration syntax, fails if the credentials file cannot be read, and shows a preview of what will be created, without changing any infrastructure.

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform plan
Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # google_compute_instance.default will be created
  + resource "google_compute_instance" "default" {
      + can_ip_forward = false
      + cpu_platform = (known after apply)
      + deletion_protection = false
      + guest_accelerator = (known after apply)
      + id = (known after apply)
      + instance_id = (known after apply)
      + label_fingerprint = (known after apply)
      + machine_type = "f1-micro"
      + metadata_fingerprint = (known after apply)
      + metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"
      + min_cpu_platform = (known after apply)
      + name = (known after apply)
      + project = (known after apply)
      + self_link = (known after apply)
      + tags_fingerprint = (known after apply)
      + zone = "us-west1-a"

      + boot_disk {
          + auto_delete = true
          + device_name = (known after apply)
          + disk_encryption_key_sha256 = (known after apply)
          + kms_key_self_link = (known after apply)
          + mode = "READ_WRITE"
          + source = (known after apply)

          + initialize_params {
              + image = "debian-cloud/debian-9"
              + labels = (known after apply)
              + size = (known after apply)
              + type = (known after apply)
            }
        }

      + network_interface {
          + name = (known after apply)
          + network = "default"
          + network_ip = (known after apply)
          + subnetwork = (known after apply)
          + subnetwork_project = (known after apply)
        }

      + scheduling {
          + automatic_restart = (known after apply)
          + on_host_maintenance = (known after apply)
          + preemptible = (known after apply)

          + node_affinities {
              + key = (known after apply)
              + operator = (known after apply)
              + values = (known after apply)
            }
        }
    }

  # random_id.instance_id will be created
  + resource "random_id" "instance_id" {
      + b64 = (known after apply)
      + b64_std = (known after apply)
      + b64_url = (known after apply)
      + byte_length = 8
      + dec = (known after apply)
      + hex = (known after apply)
      + id = (known after apply)
    }

Plan: 2 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform
can't guarantee that exactly these actions will be performed if
"terraform apply" is subsequently run.
```

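The note at the end of the plan output refers to saving the plan. If you run `terraform plan -out=tfplan` and then `terraform apply tfplan`, Terraform applies exactly the plan you reviewed. You can also render a saved plan as JSON with `terraform show -json tfplan`, which lists each change under `resource_changes` with its `actions`.

The Python sketch below recounts the add/change/destroy totals from that JSON. It assumes that JSON layout and a plan file named tfplan. The helper names are hypothetical, and the script is an illustration rather than a Terraform feature.

```python
import json
import subprocess


def summarize_plan(plan):
    """Count adds/changes/destroys in a JSON plan, like the 'Plan:' summary line.

    A replacement (actions ['delete', 'create'] or ['create', 'delete'])
    counts as one add and one destroy; 'no-op' and 'read' are ignored.
    """
    counts = {"add": 0, "change": 0, "destroy": 0}
    for resource_change in plan.get("resource_changes", []):
        actions = resource_change["change"]["actions"]
        if "create" in actions:
            counts["add"] += 1
        if "delete" in actions:
            counts["destroy"] += 1
        if actions == ["update"]:
            counts["change"] += 1
    return counts


def load_saved_plan(plan_file="tfplan", workdir="."):
    """Render a saved plan with 'terraform show -json' and parse the result."""
    result = subprocess.run(
        ["terraform", "show", "-json", plan_file],
        cwd=workdir, capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)
```

If the plan above were saved to tfplan, `summarize_plan(load_saved_plan())` should report 2 to add, 0 to change, and 0 to destroy, matching the Plan: line.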
Now it's time to run terraform apply. Once you confirm with yes, Terraform calls the GCP APIs to set up the new instance:

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform apply

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # google_compute_instance.default will be created
  + resource "google_compute_instance" "default" {
      + can_ip_forward = false
      + cpu_platform = (known after apply)
      + deletion_protection = false
      + guest_accelerator = (known after apply)
      + id = (known after apply)
      + instance_id = (known after apply)
      + label_fingerprint = (known after apply)
      + machine_type = "f1-micro"
      + metadata_fingerprint = (known after apply)
      + metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"
      + min_cpu_platform = (known after apply)
      + name = (known after apply)
      + project = (known after apply)
      + self_link = (known after apply)
      + tags_fingerprint = (known after apply)
      + zone = "us-west1-a"

      + boot_disk {
          + auto_delete = true
          + device_name = (known after apply)
          + disk_encryption_key_sha256 = (known after apply)
          + kms_key_self_link = (known after apply)
          + mode = "READ_WRITE"
          + source = (known after apply)

          + initialize_params {
              + image = "debian-cloud/debian-9"
              + labels = (known after apply)
              + size = (known after apply)
              + type = (known after apply)
            }
        }

      + network_interface {
          + name = (known after apply)
          + network = "default"
          + network_ip = (known after apply)
          + subnetwork = (known after apply)
          + subnetwork_project = (known after apply)
        }

      + scheduling {
          + automatic_restart = (known after apply)
          + on_host_maintenance = (known after apply)
          + preemptible = (known after apply)

          + node_affinities {
              + key = (known after apply)
              + operator = (known after apply)
              + values = (known after apply)
            }
        }
    }

  # random_id.instance_id will be created
  + resource "random_id" "instance_id" {
      + b64 = (known after apply)
      + b64_std = (known after apply)
      + b64_url = (known after apply)
      + byte_length = 8
      + dec = (known after apply)
      + hex = (known after apply)
      + id = (known after apply)
    }

Plan: 2 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

random_id.instance_id: Creating...
random_id.instance_id: Creation complete after 0s [id=kD0IkFJSuPI]
google_compute_instance.default: Creating...
google_compute_instance.default: Still creating... [10s elapsed]
google_compute_instance.default: Creation complete after 11s [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.
```

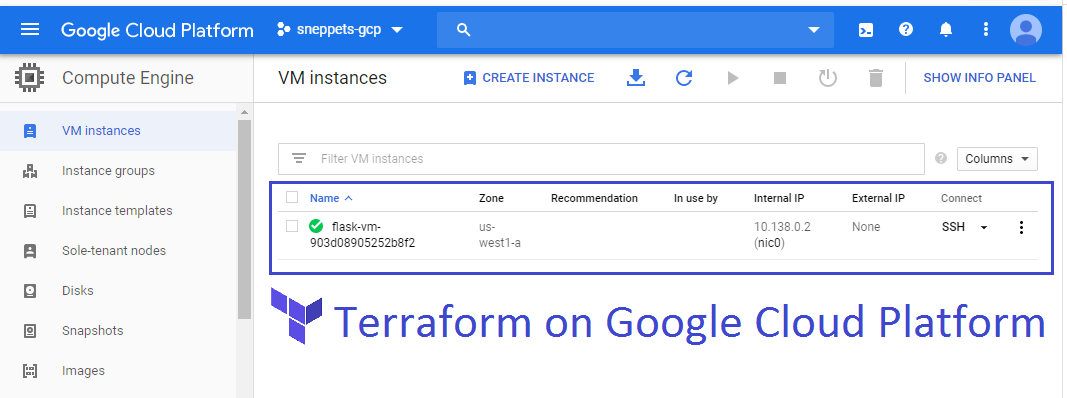

You can also open the VM instances page in the Cloud Console to see the newly created instance.
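The random_id attributes are all encodings of the same 8 random bytes. `hex` (903d08905252b8f2) becomes the instance name suffix. `b64_url` (kD0IkFJSuPI, which is also the resource id), `b64_std`, and `dec` are other representations of the same value.

As a worked check, this Python sketch recomputes those encodings from the hex value. The helper name `random_id_encodings` is hypothetical.

```python
import base64


def random_id_encodings(hex_value):
    """Recompute random_id's other encodings from its hex attribute."""
    raw = bytes.fromhex(hex_value)
    return {
        "byte_length": len(raw),
        # Standard base64, with '=' padding kept.
        "b64_std": base64.standard_b64encode(raw).decode("ascii"),
        # URL-safe base64 with the padding stripped.
        "b64_url": base64.urlsafe_b64encode(raw).decode("ascii").rstrip("="),
        # The same bytes read as one big-endian unsigned integer.
        "dec": str(int.from_bytes(raw, "big")),
    }
```

For this tutorial's instance, `random_id_encodings("903d08905252b8f2")` returns a byte_length of 8, b64_url kD0IkFJSuPI, b64_std kD0IkFJSuPI=, and dec 10393472930990438642. These match the values Terraform records for random_id.instance_id.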

Run a server on GCP

In the previous steps you created a VM instance running in GCP. The next step is to create a web application, deploy it to the VM instance, and expose an endpoint.

To access and manage the Compute Engine instance, add SSH access to it. You can generate a key pair with the ssh-keygen tool. With -f public_key, ssh-keygen writes the private key to a file named public_key and the public key to public_key.pub.

```
sneppets@cloudshell:~/myterraformproj$ sudo ssh-keygen -t rsa -f public_key -C sneppets
Generating public/private rsa key pair.
public_key already exists.
Overwrite (y/n)? y
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in public_key.
Your public key has been saved in public_key.pub.
The key fingerprint is:
SHA256:EijaO/aKFzRpSlQsP4L+enri1dcPMpQDVWzvXQd07PQ sneppets
The key's randomart image is:
+---[RSA 2048]----+
| o. .o. ....|
| o . .. o ..o|
|o +.... . . +.|
|.==+ ... . .E|
|++o.. .+S . . . .|
|..... ..o . . |
| =o . + o |
| +o* . o o |
|o=B.. . |
+----[SHA256]-----+
sneppets@cloudshell:~/myterraformproj$ ls
key.json  main.tf  public_key  public_key.pub  terraform.tfstate
```

To add the SSH key to the VM instance, reference public_key.pub in the metadata of the google_compute_instance resource in main.tf, as shown below:

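```hcl
// A single Google Compute Engine instance
resource "google_compute_instance" "default" {
  name         = "flask-vm-${random_id.instance_id.hex}"
  machine_type = "f1-micro"
  zone         = "us-west1-a"

  // Add the SSH public key to the instance metadata
  metadata = {
    ssh-keys = "<YOUR_USERNAME>:${file("public_key.pub")}"
  }

  boot_disk {
    initialize_params {
      image = "debian-cloud/debian-9"
    }
  }

  // The rest of the resource is unchanged from the previous step
  metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"

  network_interface {
    network = "default"

    // Include this section to give the VM an external IP address
    access_config {}
  }
}
```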

Replace <YOUR_USERNAME> with your username, then run terraform plan to verify the change. Once it looks right, run terraform apply to apply it.
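The `ssh-keys` metadata value is the username, a colon, and the OpenSSH public key on one line. A small Python helper that builds the same string as `"<YOUR_USERNAME>:${file("public_key.pub")}"` can catch two easy mistakes.

The first is an empty username or one containing a colon. The second is pointing at the private key, which ssh-keygen wrote to public_key in this tutorial, instead of public_key.pub. The helper name and its checks are illustrative assumptions, not part of Terraform or GCP.

```python
from pathlib import Path


def ssh_keys_metadata(username, pub_key_path):
    """Build the 'USERNAME:PUBLIC_KEY' string for the ssh-keys metadata entry."""
    if not username or ":" in username:
        raise ValueError("username must be non-empty and must not contain ':'")
    # strip() drops the trailing newline that the .pub file usually ends with.
    key = Path(pub_key_path).read_text().strip()
    # With '-f public_key' the private half lands in 'public_key', so this mix-up is easy.
    if "PRIVATE KEY" in key:
        raise ValueError(f"{pub_key_path} holds a private key; use the .pub file instead")
    if "\n" in key:
        raise ValueError(f"{pub_key_path} should contain a single public key line")
    return f"{username}:{key}"
```

For example, `ssh_keys_metadata("sneppets", "public_key.pub")` returns a string starting with `sneppets:ssh-rsa`. That matches the shape of the ssh-keys value shown in the plan below.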

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform plan
Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

random_id.instance_id: Refreshing state... [id=kD0IkFJSuPI]
google_compute_instance.default: Refreshing state... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]

------------------------------------------------------------------------

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # google_compute_instance.default will be updated in-place
  ~ resource "google_compute_instance" "default" {
        can_ip_forward = false
        cpu_platform = "Intel Broadwell"
        deletion_protection = false
        enable_display = false
        guest_accelerator = []
        id = "projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2"
        instance_id = "7523967124818997908"
        label_fingerprint = "42WmSpB8rSM="
        labels = {}
        machine_type = "f1-micro"
      ~ metadata = {
          ~ "ssh-keys" = <<~EOT
                sneppets:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF/+suASr16QnQn76UKtT2SIN4QC6DCwG+X9YBxG0wTJTZoFojHgsxL0vkUUg8Rtf06rEGO2VKPxkg3k+N2Lja2jaPtyC/6VSlKk+wB0EIaUvsbcU3j3QLTzJB4QKqHd0I8xtcRMwHnEf25HgBHWoaB6uK9i0RKFPqSSk/ODAi8xeyN3+MEA3OK1yd2gfdbVLJ4qyiB6h6gKeOOdQnF2aAC7aZnYYX1PQtAzsRTilM/5ucGCKheOAmDRtGCIy5+b39i+Y9ea6XuWuWFh3PdqzjBGstgxSLOy4mUK4n/a2t6Hj4AtAWP2WUrN2IHT6ez+qZk0JETjlBYUYDCPc5IUxz sneppets
            EOT
        }
        metadata_fingerprint = "z3O3A4ukFwg="
        metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"
        name = "flask-vm-903d08905252b8f2"
        project = "sneppets-gcp"
        self_link = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2"
        tags = []
        tags_fingerprint = "42WmSpB8rSM="
        zone = "us-west1-a"

        boot_disk {
            auto_delete = true
            device_name = "persistent-disk-0"
            mode = "READ_WRITE"
            source = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/disks/flask-vm-903d08905252b8f2"

            initialize_params {
                image = "https://www.googleapis.com/compute/v1/projects/debian-cloud/global/images/debian-9-stretch-v20191210"
                labels = {}
                size = 10
                type = "pd-standard"
            }
        }

        network_interface {
            name = "nic0"
            network = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/global/networks/default"
            network_ip = "10.138.0.2"
            subnetwork = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/regions/us-west1/subnetworks/default"
            subnetwork_project = "sneppets-gcp"
        }

        scheduling {
            automatic_restart = true
            on_host_maintenance = "MIGRATE"
            preemptible = false
        }
    }

Plan: 0 to add, 1 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform
can't guarantee that exactly these actions will be performed if
"terraform apply" is subsequently run.
```

Use an output variable for the IP address

You can use a Terraform output variable to expose the VM instance’s external IP address. Add the following block to the main.tf file:

```hcl
// A variable for extracting the external IP of the instance
output "ip" {
  value = google_compute_instance.default.network_interface[0].access_config[*].nat_ip
}
```

Now run terraform apply, followed by terraform output ip, to get the instance’s external IP address.

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform apply
random_id.instance_id: Refreshing state... [id=kD0IkFJSuPI]
google_compute_instance.default: Refreshing state... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  ~ update in-place

Terraform will perform the following actions:

  # google_compute_instance.default will be updated in-place
  ~ resource "google_compute_instance" "default" {
        can_ip_forward = false
        cpu_platform = "Intel Broadwell"
        deletion_protection = false
        enable_display = false
        guest_accelerator = []
        id = "projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2"
        instance_id = "7523967124818997908"
        label_fingerprint = "42WmSpB8rSM="
        labels = {}
        machine_type = "f1-micro"
      ~ metadata = {
          ~ "ssh-keys" = <<~EOT
                sneppets:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF/+suASr16QnQn76UKtT2SIN4QC6DCwG+X9YBxG0wTJTZoFojHgsxL0vkUUg8Rtf06rEGO2VKPxkg3k+N2Lja2jaPtyC/6VSlKk+wB0EIaUvsbcU3j3QLTzJB4QKqHd0I8xtcRMwHnEf25HgBHWoaB6uK9i0RKFPqSSk/ODAi8xeyN3+MEA3OK1yd2gfdbVLJ4qyiB6h6gKeOOdQnF2aAC7aZnYYX1PQtAzsRTilM/5ucGCKheOAmDRtGCIy5+b39i+Y9ea6XuWuWFh3PdqzjBGstgxSLOy4mUK4n/a2t6Hj4AtAWP2WUrN2IHT6ez+qZk0JETjlBYUYDCPc5IUxz sneppets
            EOT
        }
        metadata_fingerprint = "z3O3A4ukFwg="
        metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask"
        name = "flask-vm-903d08905252b8f2"
        project = "sneppets-gcp"
        self_link = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2"
        tags = []
        tags_fingerprint = "42WmSpB8rSM="
        zone = "us-west1-a"

        boot_disk {
            auto_delete = true
            device_name = "persistent-disk-0"
            mode = "READ_WRITE"
            source = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/disks/flask-vm-903d08905252b8f2"

            initialize_params {
                image = "https://www.googleapis.com/compute/v1/projects/debian-cloud/global/images/debian-9-stretch-v20191210"
                labels = {}
                size = 10
                type = "pd-standard"
            }
        }

      ~ network_interface {
            name = "nic0"
            network = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/global/networks/default"
            network_ip = "10.138.0.2"
            subnetwork = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/regions/us-west1/subnetworks/default"
            subnetwork_project = "sneppets-gcp"

          + access_config {}
        }

        scheduling {
            automatic_restart = true
            on_host_maintenance = "MIGRATE"
            preemptible = false
        }
    }

Plan: 0 to add, 1 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

google_compute_instance.default: Modifying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]
google_compute_instance.default: Still modifying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2, 10s elapsed]
google_compute_instance.default: Modifications complete after 12s [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

Outputs:

ip = [
  "34.83.17.111",
]
sneppets@cloudshell:~/myterraformproj$ sudo terraform output ip
[
  "34.83.17.111",
]
```
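To reuse the IP address in a script, read the output as JSON instead of scraping the text above. This Python sketch assumes that `terraform output -json ip` prints just the value of the ip output as JSON, for example `["34.83.17.111"]`. It also assumes the output is a list, because of the `[*]` splat. The helper names are hypothetical.

```python
import ipaddress
import json
import subprocess


def external_ip_from_output(output_json):
    """Extract the first IP address from the JSON value of the 'ip' output."""
    value = json.loads(output_json)
    # The output is a list here because of the [*] splat; a plain string
    # is accepted too in case the output is changed to a single value.
    if isinstance(value, list):
        if not value:
            raise ValueError("the ip output is empty; does the instance have an access_config block?")
        value = value[0]
    # ip_address() raises ValueError for anything that isn't a valid address.
    return str(ipaddress.ip_address(value))


def read_external_ip(workdir="."):
    """Run 'terraform output -json ip' in workdir and return the external IP."""
    result = subprocess.run(
        ["terraform", "output", "-json", "ip"],
        cwd=workdir, capture_output=True, text=True, check=True,
    )
    return external_ip_from_output(result.stdout)
```

With the state above, `read_external_ip("myterraformproj")` would be expected to return "34.83.17.111". An empty list most likely means the instance has no access_config block, so it has no external IP.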

Build web application

Next, build a Python Flask app: a single file that describes your web server, so that you can test its endpoint. Add the following code to a file named app.py on the VM instance in GCP.

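```python
from flask import Flask

app = Flask(__name__)


@app.route("/")
def hello_cloud():
    return "Hello Cloud!"


if __name__ == "__main__":
    # Listen on all interfaces (default port 5000) so the app is reachable from outside the VM.
    app.run(host="0.0.0.0")
```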

Then start the server with python app.py:

```
sneppets@cloudshell:~/myterraformproj$ sudo python app.py
********************************************************************************
Python command will soon point to Python v3.7.3.

Python 2 will be sunsetting on January 1, 2020.
See http://https://www.python.org/doc/sunset-python-2/

Until then, you can continue using Python 2 at /usr/bin/python2, but soon
/usr/bin/python symlink will point to /usr/local/bin/python3.

To suppress this warning, create an empty ~/.cloudshell/no-python-warning file.
The command will automatically proceed in seconds or on any key.
********************************************************************************
 * Serving Flask app "app" (lazy loading)
 * Environment: production
   WARNING: This is a development server. Do not use it in a production deployment.
   Use a production WSGI server instead.
 * Debug mode: off
 * Running on http://0.0.0.0:5000/ (Press CTRL+C to quit)
```

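The Flask app is now running on port 5000, the default, and listening on all interfaces (0.0.0.0). On the machine where it runs, you can reach it at http://localhost:5000/. To reach it through the VM's external IP address, the network also needs a firewall rule that allows incoming TCP traffic on port 5000. The predefined rules of the default network allow only SSH, RDP, ICMP, and internal traffic.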
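To test the endpoint, you can use a small client that requests `/` and checks for the "Hello Cloud!" greeting. The following Python sketch uses only the standard library. The function name and the 5-second timeout are illustrative choices. It assumes port 5000 is reachable from wherever you run the check.

```python
import urllib.request


def check_hello_endpoint(base_url, expected="Hello Cloud!", timeout=5.0):
    """Return True if GET <base_url>/ answers 200 with the expected greeting."""
    url = base_url.rstrip("/") + "/"
    # urlopen raises URLError/HTTPError on connection failures and error status codes.
    with urllib.request.urlopen(url, timeout=timeout) as response:
        status = response.status
        body = response.read().decode("utf-8")
    return status == 200 and body == expected
```

For example, on the VM itself you could call `check_hello_endpoint("http://localhost:5000")`. From elsewhere, use the external IP reported by terraform output ip, such as `check_hello_endpoint("http://34.83.17.111:5000")`.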

Cleaning Up

Once you are done, clean up the resources so that you aren't charged unnecessarily. The terraform destroy command removes all the resources defined in the main.tf configuration file.

```
sneppets@cloudshell:~/myterraformproj$ sudo terraform destroy
random_id.instance_id: Refreshing state... [id=kD0IkFJSuPI]
google_compute_instance.default: Refreshing state... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  - destroy

Terraform will perform the following actions:

  # google_compute_instance.default will be destroyed
  - resource "google_compute_instance" "default" {
      - can_ip_forward = false -> null
      - cpu_platform = "Intel Broadwell" -> null
      - deletion_protection = false -> null
      - enable_display = false -> null
      - guest_accelerator = [] -> null
      - id = "projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2" -> null
      - instance_id = "7523967124818997908" -> null
      - label_fingerprint = "42WmSpB8rSM=" -> null
      - labels = {} -> null
      - machine_type = "f1-micro" -> null
      - metadata = {
          - "ssh-keys" = "sneppets:ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDF/+suASr16QnQn76UKtT2SIN4QC6DCwG+X9YBxG0wTJTZoFojHgsxL0vkUUg8Rtf06rEGO2VKPxkg3k+N2Lja2jaPtyC/6VSlKk+wB0EIaUvsbcU3j3QLTzJB4QKqHd0I8xtcRMwHnEf25HgBHWoaB6uK9i0RKFPqSSk/ODAi8xeyN3+MEA3OK1yd2gfdbVLJ4qyiB6h6gKeOOdQnF2aAC7aZnYYX1PQtAzsRTilM/5ucGCKheOAmDRtGCIy5+b39i+Y9ea6XuWuWFh3PdqzjBGstgxSLOy4mUK4n/a2t6Hj4AtAWP2WUrN2IHT6ez+qZk0JETjlBYUYDCPc5IUxz sneppets\n"
        } -> null
      - metadata_fingerprint = "rTA67mb1xIY=" -> null
      - metadata_startup_script = "sudo apt-get update; sudo apt-get install -yq build-essential python-pip rsync; pip install flask" -> null
      - name = "flask-vm-903d08905252b8f2" -> null
      - project = "sneppets-gcp" -> null
      - self_link = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2" -> null
      - tags = [] -> null
      - tags_fingerprint = "42WmSpB8rSM=" -> null
      - zone = "us-west1-a" -> null

      - boot_disk {
          - auto_delete = true -> null
          - device_name = "persistent-disk-0" -> null
          - mode = "READ_WRITE" -> null
          - source = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/zones/us-west1-a/disks/flask-vm-903d08905252b8f2" -> null

          - initialize_params {
              - image = "https://www.googleapis.com/compute/v1/projects/debian-cloud/global/images/debian-9-stretch-v20191210" -> null
              - labels = {} -> null
              - size = 10 -> null
              - type = "pd-standard" -> null
            }
        }

      - network_interface {
          - name = "nic0" -> null
          - network = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/global/networks/default" -> null
          - network_ip = "10.138.0.2" -> null
          - subnetwork = "https://www.googleapis.com/compute/v1/projects/sneppets-gcp/regions/us-west1/subnetworks/default" -> null
          - subnetwork_project = "sneppets-gcp" -> null

          - access_config {
              - nat_ip = "34.83.17.111" -> null
              - network_tier = "PREMIUM" -> null
            }
        }

      - scheduling {
          - automatic_restart = true -> null
          - on_host_maintenance = "MIGRATE" -> null
          - preemptible = false -> null
        }
    }

  # random_id.instance_id will be destroyed
  - resource "random_id" "instance_id" {
      - b64 = "kD0IkFJSuPI" -> null
      - b64_std = "kD0IkFJSuPI=" -> null
      - b64_url = "kD0IkFJSuPI" -> null
      - byte_length = 8 -> null
      - dec = "10393472930990438642" -> null
      - hex = "903d08905252b8f2" -> null
      - id = "kD0IkFJSuPI" -> null
    }

Plan: 0 to add, 0 to change, 2 to destroy.

Do you really want to destroy all resources?
  Terraform will destroy all your managed infrastructure, as shown above.
  There is no undo. Only 'yes' will be accepted to confirm.

  Enter a value: yes

google_compute_instance.default: Destroying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2]
google_compute_instance.default: Still destroying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2, 10s elapsed]
google_compute_instance.default: Still destroying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2, 20s elapsed]
google_compute_instance.default: Still destroying... [id=projects/sneppets-gcp/zones/us-west1-a/instances/flask-vm-903d08905252b8f2, 30s elapsed]
google_compute_instance.default: Destruction complete after 37s
random_id.instance_id: Destroying... [id=kD0IkFJSuPI]
random_id.instance_id: Destruction complete after 0s

Destroy complete! Resources: 2 destroyed.
```
